Aquifer thermal energy storage (ATES) systems can provide heating in winter by extracting warm water from a subsurface reservoir. The extracted fluid cools as it passes through a heat exchanger and is then injected into a colder aquifer. In summer, this stored cold fluid is extracted to provide cooling, absorbing heat rejected from buildings. In turn, the extracted cold fluid heats up before being reinjected to recharge the hot aquifer for the following winter.

These systems often operate at sites where the heating demand differs from the cooling demand. Any net addition or removal of thermal energy from the subsurface causes the mean temperature of the system to drift. The imbalance in demand can be accommodated either by extracting fluid from the aquifer at different flow rates in summer and winter, or by imposing a different temperature change on the extracted fluid as it passes through the heat exchanger during the summer and winter cycles. A recent article by Emma Lepinay and Professor Andy Woods explores the difference between these two modes of operation.
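To see why either mode can absorb the imbalance, consider the thermal energy exchanged with the aquifer fluid at the heat exchanger in each season. As a rough sketch, assuming a constant volumetric heat capacity $\rho c_p$ for water, and writing $V_w$ and $V_s$ for the volumes pumped in winter and summer and $\Delta T_w$ and $\Delta T_s$ for the temperature changes across the heat exchanger (our notation, not the article's):

$$
E_w = \rho c_p \, V_w \, \Delta T_w, \qquad
E_s = \rho c_p \, V_s \, \Delta T_s, \qquad
\frac{E_w}{E_s} = \frac{V_w}{V_s} \cdot \frac{\Delta T_w}{\Delta T_s}.
$$

A seasonal imbalance $E_w \neq E_s$ can therefore be met by pumping different volumes with the same temperature change, or the same volumes with different temperature changes. For example, if the heat drawn from the aquifer fluid in winter is 1.5 times the heat returned to it in summer, the first mode uses $V_w = 1.5\,V_s$ with $\Delta T_w = \Delta T_s$, while the second uses $V_w = V_s$ with $\Delta T_w = 1.5\,\Delta T_s$.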

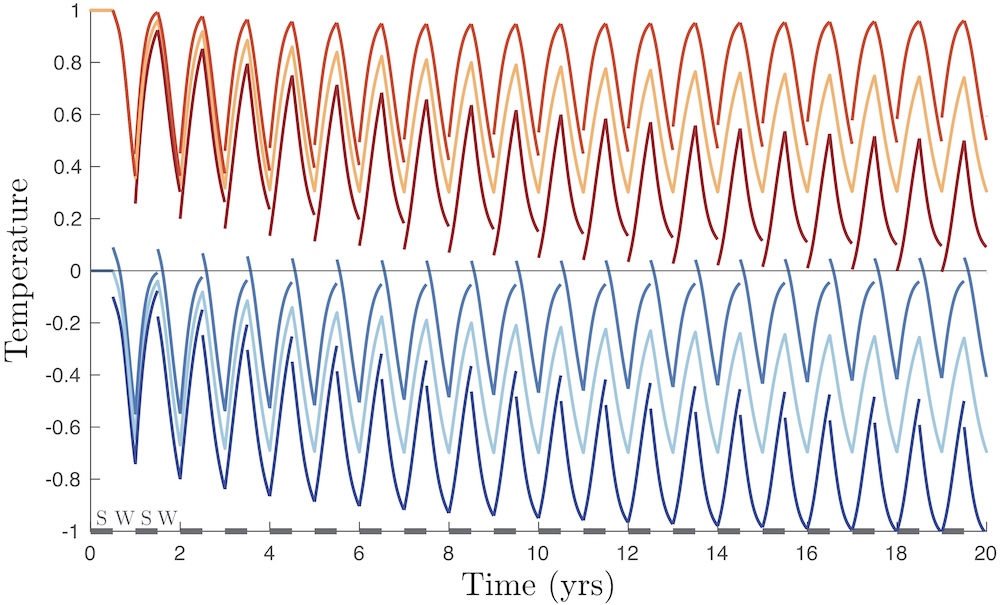

The article shows that, when different volumes of fluid are extracted and injected, the temperature of the reservoir with a net loss of fluid eventually adjusts to the far-field temperature of the subsurface, while the temperature of the other reservoir, which has a net gain of fluid, approaches the injection temperature. Conversely, a larger (or smaller) temperature change at the heat exchanger in winter than in summer leads to a gradual cooling (or heating) of both reservoirs.
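Both behaviours can be illustrated with a deliberately simple toy model. This is not the model used in the article; it is a sketch under our own assumptions: each well is surrounded by a single well-mixed store of injected water, there is no heat exchange with the surrounding rock, and whenever more water is extracted than is stored, the shortfall is native groundwater at the far-field temperature. The `Store` class, the `simulate` function and all parameter values (a 12 °C far-field temperature, initial temperatures, volumes in arbitrary units) are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Store:
    """Hypothetical well-mixed store of injected water around one well."""

    volume: float       # volume of previously injected water held in the store
    temperature: float  # mixed temperature of that water (deg C)

    def inject(self, q: float, t_in: float) -> None:
        """Mix a volume q at temperature t_in into the store."""
        total = self.volume + q
        self.temperature = (self.volume * self.temperature + q * t_in) / total
        self.volume = total

    def extract(self, q: float, t_far: float) -> float:
        """Remove a volume q and return its mean temperature.

        Assumption: if the store holds less than q, the shortfall is
        native groundwater at the far-field temperature t_far.
        """
        if q <= self.volume:
            self.volume -= q
            return self.temperature  # well-mixed: store temperature unchanged
        t_out = (self.volume * self.temperature + (q - self.volume) * t_far) / q
        self.volume, self.temperature = 0.0, t_far  # store exhausted
        return t_out


def simulate(cycles: int, q_winter: float, q_summer: float,
             dt_winter: float, dt_summer: float, t_far: float = 12.0,
             t_hot0: float = 18.0, t_cold0: float = 6.0,
             v0: float = 2.0) -> List[Tuple[float, float]]:
    """Return (winter, summer) extraction temperatures for each annual cycle."""
    hot, cold = Store(v0, t_hot0), Store(v0, t_cold0)
    history: List[Tuple[float, float]] = []
    for _ in range(cycles):
        # Winter: warm water is cooled by dt_winter and injected into the cold well.
        t_w = hot.extract(q_winter, t_far)
        cold.inject(q_winter, t_w - dt_winter)
        # Summer: cold water is warmed by dt_summer and injected into the hot well.
        t_s = cold.extract(q_summer, t_far)
        hot.inject(q_summer, t_s + dt_summer)
        history.append((t_w, t_s))
    return history


if __name__ == "__main__":
    # Mode 1: unequal volumes, equal temperature changes.
    print(simulate(2000, 1.0, 0.5, 5.0, 5.0)[-1])
    # Mode 2: equal volumes, larger temperature change in winter.
    print(simulate(40, 1.0, 1.0, 5.5, 4.5)[-1])
```

In this toy model, pumping more water in winter than in summer (`simulate(2000, 1.0, 0.5, 5.0, 5.0)`) drives the winter extraction temperature towards the 12 °C far-field value and the cold store towards its injection temperature of 12 − 5 = 7 °C. Pumping equal volumes with a larger winter temperature change (`simulate(40, 1.0, 1.0, 5.5, 4.5)`) instead makes both extraction temperatures fall from cycle to cycle; because the sketch has no heat exchange with the rock, that drift continues without bound here.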

This article has recently been published in Renewable Energy (Elsevier), and you can read it here. It builds on previous work on thermally balanced ATES systems, which you can read more about here.